In order to migrate existing data to a new system, we needed to synchronise data between a legacy Microsoft SQL Server (MSSQL) database and our new Postgres database. The first step in this process was to create a Postgres schema (a set of tables) within the new system that mirrors the tables in the old one. Data from the old system is copied over to these tables using SymmetricDS (open source database replication software). The “final hop” was to take the data from the replicated schema and populate the various schemas in our new database (we have about a dozen of them, broken down into logical groupings of tables).

Configuring SymmetricDS

Nodes

SymmetricDS uses a set of nodes which each connect to a database (via JDBC) and communicate with each other using push and pull operations (via HTTPS). Basic node properties are configured using a .properties file that defines the startup parameters.

We use a single SymmetricDS installation, hosting multiple nodes. This means we only need to provision a single docker container running alongside our new system. Because our legacy database isn’t located in the same place as our new one, SymmetricDS must connect to the legacy database using JDBC over the internet. Better performance could be achieved by provisioning two nodes running in separate containers, each connecting to a database using JDBC over a local network, then connecting them together using HTTPS over the internet.

Because we have two database, we need two nodes. One node is configured as the registration server – either node could be used for this purpose. When other nodes are started for the first time, they contact the registration server to join the network. This node is also referred to as the master node.

These nodes are configured by organizing them into groups. Configuration rules are applied to groups rather than individual nodes. This means we can set up a single group for the legacy system and another one for our new system – which contains only our development database initially, with the production database added at a later stage.

Connecting Nodes and Tables

Communication between the nodes is then configured using group links. These define how data moves between the databases. Each link is configured to push or pull data between a source group and a target group.

Routers “ride on top of” group links. They define more specifically which captured data from a source node should be sent to which specific nodes in a target node group.

Channels define logical groupings of tables. These are used to specify which tables are synchronized over the links and routers. A set of channels – (default, reload, .etc) is automatically created that all tables fall into. It’s important to note that if a table contains a column with a foreign key constraint, the table it refers to should also be within the same channel.

Table Triggers configure which tables will capture data for synchronization and the initial load. Triggers must be associated to routers using entries in the SYM_TRIGGER_ROUTER table.

Schemas

SymmetricDS creates its system tables (prefixed with sym_) in the default schema. On both Microsoft SQL Server and Postgres, this is typically set on a per-user basis. We can keep things clean by creating a user on each system for SymmetricDS, with default schemas set appropriately.

By default, data will be copied between schemas with the same names. This can be changed by setting the target_schema_name on the router.

Copying the Data

Creating Tables in the New Database

SymmetricDS can automatically create the tables to copy data into, but because of incompatibilities between SQL Server and Postgres this isn’t reliable. Instead we generate sql to create the tables using the dbexport tool included with SymmetricDS then edit it by hand. The resulting tables have a few important differences:

- Foreign key constraints are removed. These can be added after the initial load, but its important to note that foreign key constraints don’t always hold in SQL Server databases.

- Indexes are removed to improve performance – the majority of these were added to improve performance of the legacy application, so we remove them all and add only the indexes we need.

- To prevent duplicate rows, tables with no primary keys must be either given primary keys or unique constraints containing all columns.

- Some fields were changed from VARCHAR() to TEXT because MSSQL supports larger varchar fields.

Initial Load

After creating the tables, an initial load can be triggered using the symadmin command-line tool.

To copy the data from the legacy-000 node to the node with external id 001:

/opt/symmetric-ds/bin/symadmin --engine legacy-000 reload-node 001Initial Load of a Single Table

If there are problems with the initial load, or if you wish to copy another table to the new database, an initial load of a single table can be triggered.

To copy the data in table_name from the legacy-000 node to the node with external id 001:

/opt/symmetric-ds/bin/symadmin reload-table table_name --engine legacy-000 --node 001Partial Load

You can also copy individual rows from a table.

The following SQL inserts a row into the sym_data table that triggers a reload of all the rows from a single table (like the command above). See the send section in the user guide for more information.

INSERT INTO sym_data (

node_list, table_name, event_type, row_data, channel_id, create_time,

trigger_hist_id

) VALUES (

'001', 'table_name', 'R', '1=1', 'reload', current_timestamp,

(SELECT max(trigger_hist_id) FROM sym_trigger_hist WHERE trigger_id='table_name')

);1=1 is the WHERE clause that is used to select the rows to copy over – it can be changed in order to only copy a few rows from the table.

Verifying the Data

One way to verify that the initial load was successful is to count the rows in the tables:

Postgres

VACUUM ANALYZE;

SELECT

nspname AS schema_name,

relname AS table_name,

reltuples AS row_count

FROM pg_class C

LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace)

WHERE nspname IN ('legacy')

AND relkind='r'

ORDER BY relname ASC;Microsoft SQL Server

SELECT

t.name AS table_name,

s.row_count AS row_count

FROM sys.tables t

JOIN sys.dm_db_partition_stats s ON t.object_id = s.object_id

WHERE t.type_desc = 'USER_TABLE'

AND t.name not like '%dss%'

AND t.name not like 'sym_%'

AND s.index_id IN (0,1)

ORDER BY t.name;The Final Hop

Back to the reason we used SymmetricDS on our project; We needed to get data from a SQL Server Database on Azure into a structurally different database in PostgreSQL, hosted on Amazon RDS. SymmetricDS got us three-quarters of the distance, with a replica of the original database, structurally identical to the original, save for some data type conversions, sitting in its own schema in our target database.

The “final hop” was to take the data from the replicated schema and populate the various schemas in our new database (we have about a dozen of them, broken down into logical groupings of tables).

For lookup tables, the new database uses an integer primary key, rather than a mix of integers and characters, depending in the specific lookup. For the most part, reference data is loaded up into a single lookup table, which supports many types of data. The legacy system took this approach for some tables, and for others had a single table per lookup. There was not clear rationale in terms of which approach was used (and was probably just whatever was easiest at the time). The first stage of the migration process was to populate all the lookup data into the new combined lookup. Each row in the target table contains the name of the original table and its original id. The data for each lookup was migrated using a stored function call something like this:

SELECT "legacy_interface".f_copy_data_to_lib_lookup('lib_advice_list', 'Consent Advice', 'legacy', 'tgCnsntGrndLst', 'CnsntGrndLstCd','CnsntGrndLstCd', 'CnsntGrndLstDesc');The arguments above being target “virtual table name”, a human readable name, what schema to get the data from, with the final three being the id, name and description in the old database. It is worth noting that the original table and column names were all highly abbreviated. There is no need to use short and incomprehensible names in a modern RDBMS (Postgres allows 63 characters for identifiers), so please don’t do it.

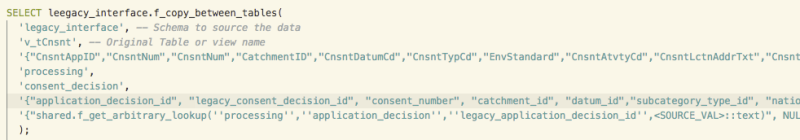

A Postgres stored function was created to migrate data from one table (or view) to another, calling mapping functions along the way (to change column names, swap lookup values, in the case if lookups, etc). This function was called one or more times for each original table, and in some cases against views created on top of the original tables, where the structure did not match.

Fundamentally, we ended up with a bunch of migration calls that look something like this:

It looks a bit ugly, but basically we have the following arguments: Source Schema, Source Table/View, an array of original column names, target schema, target table, an array of target column names, and an optional array of mapping functions to call on each of those columns.

The f_copy_between_tables function moves and maps the data and also adds comments to the target table and columns explaining where the data was sourced from. The idea was it would be a bit easier to read and modify than purely using INSERT INTO… SELECT FROM statement

Fundamentally, we could have done the final hop using SymmetricDS, but using our own stored functions gave us an easy mechanism to do so, which we knew had all the flexibility we needed. In the end, the migration is a mix of calls to the copy stored function and some INSERT INTO… SELECT FROM statements (also using the various mapping stored functions).

Banner image: Photo by Emiliano Arano