You know that moment when Netflix suggests exactly the movie you feel like watching? Or when your food app seems to know you're about to give in to a late-night snack? It almost feels spooky, right? Like, how did it know?

That seemingly magical moment has a very practical explanation. It's about how tech pays attention to the tiny clues we leave behind. The clicks. The pauses. The time of day. Even whether we're at home, at work, or out in the rain.

These little clues are called behavioural and contextual signals. And together, they shape what we see and how we experience the digital world. Sometimes it feels helpful, sometimes it feels creepy. But either way, it's happening all the time.

Over the last decade or so, what we call "personalisation" has quietly become something way more sophisticated. Traditional personalisation remembers what you've done before. Hyper-personalisation, by contrast, reads signals in real time, combining several of them to tailor the experience to this exact moment. The distinction matters because we're increasingly designing systems that don't just remember preferences; they actively adapt to what's happening right now.

What are behavioural and contextual signals in UX design?

Let's keep it simple.

Behavioural signals are what we do: how we scroll, what we click, how long we hang around on a page before giving up. It's like our digital body language. The Nielsen Norman Group treats this as behavioural data and distinguishes it from attitudinal data: what people actually do versus what they say they want.

Contextual signals are what's happening around us: the time, the place, the device, the mood of the moment. Reading news on your phone at midnight isn't the same as reading it on your laptop during a busy morning. Research in the Journal of Ambient Intelligence and Humanized Computing shows that context-aware systems can improve user experience by adapting to these environmental factors in real time.

Neither type says much on its own. But stack them together, and they start painting a picture of what we might want or need right now.

How behavioural data improves user experience

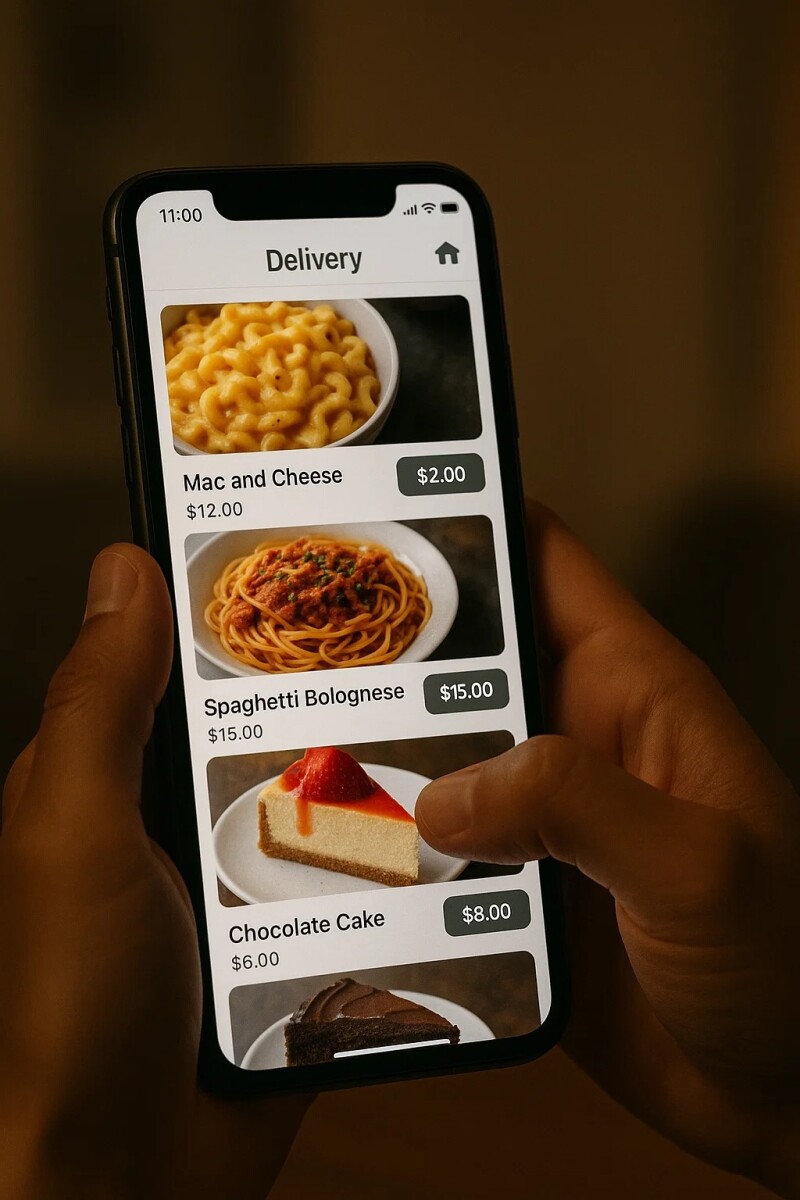

Picture someone scrolling slowly through a food app on their phone at 11pm. They linger on comfort food photos, maybe hover over a few "add to cart" buttons without clicking. Their location shows they're at home, not at the office. The app starts connecting these dots: late evening plus slow browsing plus comfort food interest plus home location. Suddenly, those cosy pasta dishes and desserts float to the top of their feed.

Each signal alone doesn't mean much. Maybe they stopped scrolling because their dog just knocked over a glass of water. Maybe they're at home but hosting a dinner party. But when multiple signals align, patterns emerge. The app makes an educated guess that this person might want something indulgent and easy tonight.
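To make the "stacking" idea concrete, here's a minimal Python sketch of the food-app scenario. Everything in it is hypothetical: the `Signals` fields, the thresholds, and the rule that at least three of the four signals must agree before the feed changes. A production system would more likely learn how much each signal counts from data, so treat this as an illustration of the logic rather than how any particular app works.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    hour: int               # local hour of day, 0-23
    scroll_speed: float     # hypothetical metric: screens scrolled per minute
    comfort_dwell_s: float  # seconds spent on comfort-food items this session
    at_home: bool           # coarse location, used only with the user's consent


def comfort_food_score(s: Signals) -> int:
    """Count how many independent signals point toward 'something indulgent and easy tonight'."""
    checks = [
        s.hour >= 21 or s.hour < 2,  # late evening
        s.scroll_speed < 1.0,        # slow, lingering browsing
        s.comfort_dwell_s > 30,      # repeated interest in comfort food
        s.at_home,                   # at home rather than at work
    ]
    return sum(checks)


def rank_feed(items: list, s: Signals, min_agreeing: int = 3) -> list:
    # Any single signal could be noise (the dog, the dinner party), so only
    # re-rank when several of them agree.
    if comfort_food_score(s) < min_agreeing:
        return items
    # sorted() is stable: comfort items move up, everything else keeps its order.
    return sorted(items, key=lambda item: not item["comfort"])


feed = [
    {"name": "Salad bowl", "comfort": False},
    {"name": "Mac and cheese", "comfort": True},
]
late_night = Signals(hour=23, scroll_speed=0.5, comfort_dwell_s=45, at_home=True)
print([item["name"] for item in rank_feed(feed, late_night)])
# ['Mac and cheese', 'Salad bowl']
```

Run the same browsing pattern at lunchtime from the office and only two signals agree, so the feed stays in its original order. That threshold is the code version of "each signal alone doesn't mean much."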

Now imagine a different scenario. Someone's bouncing quickly through help pages during work hours, their mouse movements sharp and impatient. They're clicking around like they're in a hurry, maybe even showing signs of frustration in how they navigate. That's a signal stack pointing toward urgency. Instead of serving up more self-service articles, the system might prioritise offering live chat support.
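The help-page scenario follows the same pattern. In this equally hypothetical sketch, the `Session` fields and every threshold are assumptions made up for illustration. The point is only that the system switches from articles to live chat when at least two urgency signals show up together.

```python
from dataclasses import dataclass


@dataclass
class Session:
    hour: int               # local hour of day, 0-23
    help_pages_viewed: int  # help articles opened this session
    minutes_elapsed: float  # session length so far
    back_navigations: int   # hypothetical: times the user retreated to search or the help index


def looks_urgent(s: Session) -> bool:
    # Floor the duration so a brand-new session doesn't divide by zero.
    pages_per_minute = s.help_pages_viewed / max(s.minutes_elapsed, 0.5)
    signals = [
        9 <= s.hour < 18,                                     # during typical work hours
        s.help_pages_viewed >= 3 and pages_per_minute >= 2,   # bouncing quickly between articles
        s.back_navigations >= 3,                              # repeatedly backing out and retrying
    ]
    return sum(signals) >= 2


def support_option(s: Session) -> str:
    return "offer_live_chat" if looks_urgent(s) else "suggest_articles"


print(support_option(Session(hour=14, help_pages_viewed=8, minutes_elapsed=3, back_navigations=4)))
# offer_live_chat
print(support_option(Session(hour=20, help_pages_viewed=2, minutes_elapsed=10, back_navigations=0)))
# suggest_articles
```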

When behaviour and context line up like this, tech starts feeling like it's actually paying attention. But of course, this raises questions about privacy and boundaries that we can't ignore.

Are Behavioural Signals in UX Helpful or Invasive?

This is where things get interesting, and where we need to be most thoughtful.

Even in fitness apps, signals create these moments of apparent mind-reading. If your wearable shows stress levels are high and you've been skipping workouts, the app might gently nudge you toward something different. Maybe yoga instead of another punishing HIIT session. Maybe a walking meditation instead of a 10K run. The app isn't reading your mind; it's reading your data and making an informed guess about what might actually help.

But here's the thing. Every time we use these signals, we're walking a line between helpful and invasive. That comfort food suggestion might feel caring one night and manipulative the next. The fitness app's gentle nudge could be exactly what someone needs, or it could feel presumptuous about their mental state.

Why UX Personalisation Signals Matter

When it's done well, signals make experiences easier, bringing the right thing at the right time. They can be more inclusive too, like switching to bigger text late at night when your eyes are likely tired, or turning on captions when the room is too noisy to hear. People return to apps that feel personal, and there's something almost like digital care in the way a boarding pass surfaces at the airport right when you need it.

A 2021 McKinsey study found that companies excelling at personalisation generate 40% more revenue from these activities than average players. But the real win isn't just business metrics. The best implementations feel supportive rather than manipulative. They amplify human needs instead of exploiting them.

Privacy concerns with personalised apps

Of course, it can go wrong in ways that matter.

Sometimes signals get misread entirely. That slow scrolling through the food app? Maybe the person was actually comparison shopping for a dinner party, not craving comfort food. Now they're stuck in an algorithm bubble of indulgent suggestions when they wanted fresh, impressive options.

Then there's the creep factor. That "how did they know?" moment can easily tip into "why are they spying on me?", especially when people don't understand what data is being collected or how it's being used. Location data, personal signals, biometrics: these are all sensitive. Mishandle them and you lose trust in an instant.

Common privacy concerns include:

- Location tracking without clear consent

- Behavioural profiling that feels invasive

- Data sharing with third parties

- Lack of control over personal information

- Unclear data retention policies

Algorithms also love to box us in. They keep serving up more of what we already clicked, which can narrow our world instead of widening it. The person who watched one true-crime documentary suddenly gets trapped in an endless feed of murder mysteries, missing out on the comedy special they might have loved. Recent research on personalised user experiences highlights how this "filter bubble" effect can limit exposure to diverse content, even when users would benefit from variety.

Best practices for ethical personalisation

If we're designing with signals, there are some guardrails worth holding onto.

Being open about what's happening helps build trust. A simple "We noticed you like dark mode at night" or "Based on your recent activity" gives people context for why they're seeing what they're seeing. Nielsen Norman Group research emphasises the importance of this transparency, noting that users prefer customisation (where they control settings) over pure personalisation (where the system decides for them).

People should have real control too, not just the illusion of it. Give them ways to turn personalisation on or off, or to course-correct when the algorithm gets it wrong.
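In code, transparency and control can start as simply as attaching a plain-language reason to each recommendation and checking the user's settings before personalising anything. This is a minimal sketch under stated assumptions: the `Preferences` object, its on/off switch and its set of muted topics are hypothetical, as are the `recommend` function and the made-up titles.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Preferences:
    personalised: bool = True                       # the on/off switch the user controls
    muted_topics: set = field(default_factory=set)  # "show me less of this" corrections


@dataclass
class Recommendation:
    title: str
    topic: str
    reason: Optional[str]  # plain-language explanation shown next to the item


def recommend(personal: list, popular: list, prefs: Preferences) -> list:
    """personal and popular are lists of (title, topic) pairs from hypothetical upstream systems."""
    if not prefs.personalised:
        # Respect the off switch: fall back to non-personalised content.
        return [Recommendation(t, topic, "Popular this week") for t, topic in popular]
    return [
        Recommendation(t, topic, "Based on your recent activity")
        for t, topic in personal
        if topic not in prefs.muted_topics  # honour the user's course-corrections
    ]


personal = [("Night of the Killer", "true crime"), ("Stand-up Special", "comedy")]
popular = [("Top 10 This Week", "general")]
prefs = Preferences(muted_topics={"true crime"})
print([r.title for r in recommend(personal, popular, prefs)])
# ['Stand-up Special']
```

Muting a topic is a small, real lever: it lets the true-crime viewer from earlier climb out of the bubble without switching personalisation off entirely.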

Key principles for responsible personalisation:

- Start with user consent and clear communication

- Provide meaningful control options

- Design for diversity from the start

- Test with different user groups

- Prioritise user benefit over business metrics

- Build in feedback mechanisms for course correction

Personalisation should grow step by step, not jump straight into "we know everything about you." Start small, earn trust, then gradually get more sophisticated. And stay honest about intent. Don't use signals just to push business goals. Ask whether what you're building actually makes someone's life better.

Design for diversity from the start. Test with different kinds of people so your signal interpretation doesn't just work for one narrow group. What feels helpful to a busy parent might feel overwhelming to someone with anxiety. What works in one culture might completely miss the mark in another.

Real-world examples of behavioural signals

Netflix constantly tweaks recommendations, and even the artwork you see changes depending on what you've watched. Show the same movie to a comedy lover and a drama fan, and they may see entirely different poster images for it.

Google Maps layers in traffic, time, weather, and your personal travel patterns to suggest routes that feel almost psychic in their helpfulness.

Amazon blends your browsing history with seasonal events, recent purchases, and even what other people in your area are buying to surface that perfect "next thing."

Spotify tracks not just what you listen to, but when and where you listen. Its in-app car mode was designed to switch on automatically when your phone connected to a car's Bluetooth, swapping in bigger buttons and a simpler layout. (Car Thing, its separate dashboard device with voice control, has since been discontinued.) Your music app literally adapted to the fact that your hands are on the wheel and your eyes should be on the road.

LinkedIn watches how you interact with posts (do you read the full article or just scroll past?) to determine what content to show you next.

These aren't far-off ideas. They're already woven into our everyday digital experiences, quietly shaping what we see and how we interact with technology.

The Future of Behavioural Signals in UX

The next wave might be emotional signals. Imagine an assistant that picks up on tension in your voice and responds more gently. Or a learning app that notices you're bored (maybe through camera data showing you're looking away, or through interaction patterns that suggest disengagement) and shifts gears to something more engaging.

Recent research on AI-driven behavioural analysis explores exactly these possibilities, examining how systems can detect emotional states through interaction patterns and adapt accordingly. It could feel deeply human and supportive. Or unsettling and invasive. Maybe both. Once again, it comes down to trust, intent, and whether we prioritise empathy over exploitation.

Which brings us to the questions that really matter: Which signals are genuinely helpful, and which just cross the line? How do we avoid leaving people out while tailoring for the individual? Should users have more control, like a comprehensive dashboard for personalisation settings? And who gets to decide what's "okay": us as designers, our companies, or regulators stepping in with new rules?

The answers aren't easy, but they're worth wrestling with because they shape how human our digital future feels.

Key Takeaways: Behavioural Signals and UX Design

Behavioural and contextual signals are shaping the way technology feels, making it less static and more responsive. Done with care, they make life smoother and more personal. But as research consistently shows, they can also misfire, create filter bubbles, or push past boundaries we didn't agree to.

That's why empathy matters in how we build these systems. That's why clarity matters in how we communicate what we're doing. The real test is whether these signals support us, or use us.

Because in the end, personalisation isn't about the data. It's about the person behind it. And if we keep that human at the centre of our thinking, maybe the future of UX won't just be smarter. It'll feel a little more human, too.