Many AI adoption efforts fail not because the technology is weak, but because organisations misunderstand how AI can be introduced into real businesses. The issue is rarely the model. It is the thinking, or lack of time given to thinking, around ownership, data, risk, decision making, and experimentation.

We often see a weak culture of experimentation, where teams expect results on the first attempt and have little room to test, fail, and share what did not work. At the same time, some organisations try to “enable” AI by pre-approving every model or licence available, hoping optionality will create momentum. In practice, progress tends to come from clear guardrails, structured education, and explicit permission to experiment first, then investing more broadly once learning is grounded in reality.

A familiar pattern plays out. Leadership feels pressure to act. An AI initiative gets funding. A tool is selected. Early results look promising. Then progress slows. Trust drops. The work quietly stalls and gets labelled as “not right for us”.

What failed was not AI itself. It was the approach.

Treating AI adoption as a shortcut

AI is often framed as a faster path to efficiency or cost reduction. That idea is appealing, especially when teams are stretched. At board level, there is often strong enthusiasm to get on board with anything labelled “AI”, fuelled by success stories and competitive noise. This can create a kind of technical FOMO, where the pressure to act builds faster than the clarity needed to act well. The reality is less forgiving.

AI systems sit on top of existing processes, data, and behaviours. They do not replace them. If decision making is unclear, AI will repeat that uncertainty at speed. If processes differ between teams, AI will reinforce those differences rather than smooth them out.

This is why solid engineering foundations matter more than ever. Without clear system boundaries and well-understood data flows, AI simply accelerates existing problems instead of fixing them. This view is also reflected in guidance from the OECD, which positions AI as an organisational capability rather than a standalone tool.

Starting with tools instead of problems

A common starting point sounds harmless enough: “we should be using AI”. The missing part is the reason.

When teams begin with tools, the problem often bends to justify the choice rather than being defined on its own terms. Energy goes into making a use case fit instead of questioning whether it matters.

This is not a technology failure. It’s a discovery failure. The same thinking that underpins good product work applies here too. Understand the user, the decision, and the outcome before deciding how to build.

Frameworks such as the AI Risk Management Framework from NIST reinforce this point, placing problem definition and context ahead of technical design.

AI works best when applied to clearly understood problems with known constraints and consequences. That does not mean the path to clarity has to be linear. Sometimes it is right to re-map the process, take a thin slice of a potential solution, and move it into early-stage production purely to learn. Used this way, techniques like vibe coding can support interactive discovery and fast feedback, not as a shortcut to shipping production software, but as a way to pressure-test ideas before committing properly.

Overestimating AI readiness and data quality

Most organisations know their data is not in great shape. That reality shows up quickly once you look closely. Basic fields are defined multiple ways. Gender appears as male or female, M or F, 1 or 2. Geographic boundaries change over time so that “Auckland” means different things across datasets. A customer is defined differently across systems. Timestamps vary in format. Columns like "legacy_flag_2" exist, but no one knows why. Poor metadata makes even simple search and discovery harder than it should be.
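As a small illustration of the inconsistent-encoding problem described above, the sketch below profiles a single field before it is used anywhere downstream. It is a minimal example under stated assumptions, not a recommended pipeline: the mapping table, the function names, and in particular the meaning of the numeric codes (1 and 2) are hypothetical and would need confirming against each source system.

```python
from collections import Counter

# Hypothetical mapping: the raw codes mirror the examples in the text.
# Which numeric code means which value is an ASSUMPTION and must be
# confirmed with the owners of each source system.
GENDER_CODES = {
    "male": "male", "m": "male", "1": "male",
    "female": "female", "f": "female", "2": "female",
}


def normalise_gender(raw):
    """Return a canonical value, or None when the code is unrecognised."""
    if raw is None:
        return None
    return GENDER_CODES.get(str(raw).strip().lower())


def profile_column(values):
    """Count canonical values and collect raw values that could not be mapped."""
    counts = Counter()
    unmapped = Counter()
    for raw in values:
        canonical = normalise_gender(raw)
        if canonical is None:
            # Keep the raw representation so someone can investigate it.
            unmapped[repr(raw)] += 1
        else:
            counts[canonical] += 1
    return counts, unmapped


if __name__ == "__main__":
    sample = ["Male", "F", 1, "2", " m ", "unknown", None]
    counts, unmapped = profile_column(sample)
    print("mapped:", dict(counts))
    print("needs review:", dict(unmapped))
```

The design choice worth noting is that unrecognised values are surfaced for review rather than silently guessed, which keeps the decision about what the data means with people who understand its history.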

Data that works for reporting often fails when used to support decisions. Definitions vary and context is missing. Important knowledge lives outside systems. Older data reflects ways of working that no longer exist.

AI doesn’t compensate for these gaps. It absorbs them. Bias and inconsistency become part of the output, and by the time problems appear, confidence has already taken a hit.

Strong AI adoption starts with understanding what data should be used, what should not, and why.

Underestimating organisational change

AI changes how work feels, not just how it flows. Alongside enthusiasm, organisations often see a split response. There are keen early adopters and quiet super users, but also people who feel threatened, others who dismiss it as another tech bubble, and some who try it briefly, hit friction, then fall back to familiar habits. Without deliberate education and support, adoption fragments and tools sit half used.

People worry less about job loss and more about accountability. If AI suggests a decision, who owns the outcome? Who explains it to stakeholders? Who carries the risk when it goes wrong?

These questions sit outside the model itself. They live in governance, culture, and ways of working. Ignore them and AI becomes a trust problem rather than a technical one. In the worst cases, organisations introduce what has been described as “workslop”, a flood of low-quality, AI-generated output that creates noise, not value. Instead of delivering 10x productivity, AI can become a Trojan horse in an underprepared organisation, quietly undermining focus, judgement, and confidence.

Assuming AI replaces thinking

One of the riskiest assumptions is that AI reduces the need for judgement. In truth, it increases it.

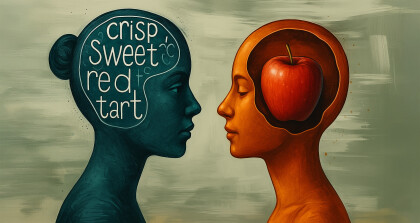

AI is good at recognising patterns and suggesting options. It is not good at understanding trade-offs, organisational context, or long-term consequences that sit outside the data.

Strong teams treat AI outputs as inputs. They question them, test them, and decide when not to follow them. That requires confidence and domain understanding, not blind trust.

Leaving security and privacy too late

Many AI initiatives begin informally. Someone tests a tool. A dataset gets uploaded. It feels harmless at the time.

Once real data and real decisions enter the picture, the risk changes. Information can leak, models can retain data unexpectedly, and regulatory obligations can be breached without obvious warning.

In practice, the bigger problem is not security or privacy themselves, but when they appear late as blockers. That moment creates tension, slows momentum, and puts people in impossible positions. Privacy and security are far easier to design in than retrofit, which is why they work best as early constraints. Involving privacy and security leaders from the start, and inviting them into experiments and process design, turns them from a final hurdle into active contributors and avoids painful corrections later on.

Measuring the wrong outcomes

Early AI projects are often judged by how impressive they look, or by what they have not cost. Demos land well. Something appears to have been done faster and cheaper than anyone thought possible. Usage numbers rise and leadership feels reassured.

What is easier to miss is that long-term realities have not disappeared. Documentation, supportability, and maintainability still matter. When those are deferred, early wins can quietly turn into future drag.

Meanwhile, the underlying work remains unchanged. Decisions take just as long, errors persist, and with time, people quietly revert to familiar tools.

Real value shows up elsewhere. Better decisions. Fewer reworks. Time saved where it matters. Confidence in outcomes.

A slower start leads to better AI outcomes

The organisations that succeed with AI tend to slow down at the start. They invest time in understanding the problem, the data, and the people involved. That work creates options rather than locking in early choices.

AI adoption works best when treated as a product, engineering, and systems challenge, not a plug-in feature. The goal is not to use AI. The goal is to make better decisions, more reliably, in a way people trust.

Clarity beats speed.

Good questions beat clever tools.